Artificial Intelligence System - Product Introduction

The AI Multimodal Large Model System is a deep learning system that combines multiple data modalities, such as text, images, voice, and video. It helps enterprises better understand customer needs, markets, and risks, thereby making their products more intelligent.

By integrating top-tier models such as OpenAI-GPT5, Deepseek-V3, and Stable Diffusion with GTS’s in-house agents and workflow engine, the team delivers fully customized development solutions based on AIGC multimodal large models.

OpenAI-GPT5

Deepseek-V3

Stable Diffusion

AI Multimodal Large Model Application - Existing Challenges

Data Privacy and Cross-border Compliance Issues

Public cloud APIs may send user inputs back to providers’ training pools, and financial or medical data crossing borders can breach industry regulations.

Complex Integration and Fusion of Heterogeneous Data Sources

Text, images, audio, and video have vastly different distributions in vector space, making the integration and fusion of data a significant challenge.

Imbalance Between Computing Cost and Return

Training a model with hundreds of billions of parameters requires tens of thousands of A100 hours. The enormous resources consumed by training and inference for large-scale AI models leave enterprises with a disproportionately low ROI in the long run.

The Gap in Cross-Modal Inference and Training Challenges

Traditional concatenation-based fusion methods lead to frequent errors and omissions in cross-modal retrieval, while the high cost of manual annotation makes it extremely difficult for enterprises to balance investment with accuracy.

Lagging Data and Industry Knowledge Updates

Industry knowledge and data change constantly, so traditional models tend to fall behind the latest industry standards and regulations.

Bottlenecks in Real-Time High-Concurrency Inference

When peak requests double in high-concurrency scenarios, traditional AI deployments suffer queue backlogs, interface timeouts, and downtime that directly impact revenue.

GTS AI Multimodal Application - Core Technology Highlights

Localized Private Engine for Data Protection

Using a full-stack private image of OpenAI-GPT5 and Deepseek-V3, coupled with FIPS 140-3 encryption and a zero-trust architecture, the entire training and inference process can be completed within the customer's data center. Built-in compliance templates allow one-click generation of audit working papers, eliminating the risks of public cloud data transfer and cross-border compliance.

Multimodal Data Integration and Seamless Fusion

The GTS team uses unified joint embedding to map text, images, audio, and video to the same vector space, enabling cross-modal retrieval and inference without requiring separate models for each format. It is plug-and-play for multiple industries, including finance and healthcare.
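As a rough illustration of retrieval in a shared vector space, the sketch below ranks items of different modalities against one query vector using cosine similarity. The three-dimensional vectors, the labels, and the `search` function are made up for illustration; a real system would obtain embeddings from trained encoders and would not be limited to this scoring rule.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical hand-made embeddings; every modality lives in the same space.
INDEX = [
    ("text", "invoice summary", [0.9, 0.1, 0.0]),
    ("image", "scanned invoice", [0.8, 0.2, 0.1]),
    ("audio", "support call", [0.1, 0.9, 0.2]),
    ("video", "product demo", [0.0, 0.3, 0.9]),
]

def search(query_vec, top_k=2):
    """Return the top_k (modality, label) pairs closest to the query."""
    scored = [(cosine(query_vec, vec), modality, label)
              for modality, label, vec in INDEX]
    scored.sort(reverse=True)
    return [(modality, label) for _, modality, label in scored[:top_k]]

results = search([1.0, 0.1, 0.0])
```

Because all items share one space, a single query can return a text record and an image together without a separate model per format.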

Distributed Framework for Elastic Scaling

The distributed computing framework shards hundreds of billions of parameters across 128 GPUs. Cloud-native technology enables efficient model training and inference on multi-GPU clusters. Combined with containerization and automated scheduling tools, computing resources scale up and down dynamically, which significantly reduced annual GPU hours and shortened the ROI cycle in real-world testing.
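To give a sense of scale, the back-of-the-envelope sketch below estimates the weight memory each GPU holds when parameters are split evenly. The figure of 200 billion parameters and the 2 bytes per parameter (16-bit weights) are assumptions chosen for illustration; the estimate ignores optimizer state, activations, and any replication.

```python
def shard_memory_gib(total_params, num_gpus, bytes_per_param=2):
    """Weight memory per GPU in GiB when parameters are split evenly."""
    params_per_gpu = total_params / num_gpus
    return params_per_gpu * bytes_per_param / 2**30

# Assumed model size: 200 billion parameters, sharded across 128 GPUs.
per_gpu = shard_memory_gib(total_params=200e9, num_gpus=128)
print(f"{per_gpu:.2f} GiB of weights per GPU")
```

Under these assumptions the weights alone come to roughly 2.9 GiB per GPU, which is why training memory is usually dominated by the terms this sketch leaves out.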

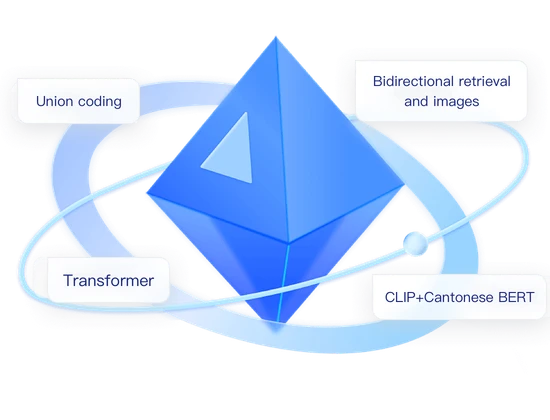

Unified Embedding of Joint Encoding and Inference Engine

CLIP + Cantonese BERT joint encoding is used for cold start, followed by retraining with a unified Transformer. This approach overcomes the limitations of traditional methods, achieving higher-precision bidirectional retrieval and joint inference over images and medical record descriptions and reducing the annotation workload to 80%.

Federated Learning and Dynamic Knowledge Base Fine-Tuning

The GTS team employs federated learning and domain adapters, building its own intelligent search engine and API tools to achieve 30-minute hot updates and less than 2% loss of general capabilities, ensuring continuous business compliance without full retraining.
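The text does not say which aggregation rule is used. As one common example, the sketch below shows sample-weighted federated averaging (FedAvg), where each site trains locally and only its weights are combined centrally. The function name and the toy numbers are illustrative assumptions.

```python
def federated_average(client_updates):
    """Combine local weights, weighting each client by its sample count.

    client_updates: list of (num_samples, weights) pairs, where weights
    is a list of floats of the same length for every client.
    """
    total = sum(n for n, _ in client_updates)
    dim = len(client_updates[0][1])
    return [sum(n * w[i] for n, w in client_updates) / total
            for i in range(dim)]

# Two hypothetical sites; raw data never leaves either site.
merged = federated_average([(100, [1.0, 2.0]), (300, [3.0, 4.0])])
```

The site with more samples pulls the merged weights closer to its own values, so `merged` here is `[2.5, 3.5]`.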

High-Concurrency Real-Time Inference

The solution combines a GPU pool, dynamic batching, and token-level streaming responses with flexible load balancing and real-time resource scheduling. This keeps the system stable under high concurrency, meets enterprise-level real-time inference requirements, and enables zero application downtime.
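The sketch below illustrates the two ideas in simplified form: queued requests are grouped into batches, and each batch returns output token by token rather than waiting for complete answers. The `stream_tokens` function is a stand-in that echoes the prompt word by word; a production batcher would also use time windows and real model calls, which are omitted here.

```python
from collections import deque

def make_batches(pending, max_batch_size):
    """Group queued requests into batches of at most max_batch_size."""
    queue = deque(pending)
    while queue:
        size = min(max_batch_size, len(queue))
        yield [queue.popleft() for _ in range(size)]

def stream_tokens(prompt):
    """Stand-in for a model: yields the prompt back one word at a time."""
    for token in prompt.split():
        yield token

def serve(pending, max_batch_size=2):
    """Yield (request_id, token) pairs as soon as each token is ready."""
    for batch in make_batches(pending, max_batch_size):
        streams = {req_id: stream_tokens(prompt) for req_id, prompt in batch}
        # Round-robin: one token per active request per step.
        while streams:
            for req_id in list(streams):
                try:
                    yield req_id, next(streams[req_id])
                except StopIteration:
                    del streams[req_id]

events = list(serve([("a", "hi there"), ("b", "ok"), ("c", "yes")]))
```

Tokens from requests in the same batch interleave, so a short request finishes without waiting for a longer one in its batch.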

GTS offers customized AI multimodal large-scale model application development services for clients across all industries.

Enterprise-Level AI Model Application Development Services - Solutions

Risk of Factual Error

General models may produce factual errors, affecting translation accuracy.

Lack of Reliable Data Sources

General search engines cannot provide accurate data for specific fields.

High Industry Barriers and Poor Adaptability of General Products

General models lack sufficient understanding of industry-specific knowledge.

Poor Cantonese Adaptability of General Models

General models have limited support for Cantonese translation.

Agent-LLM Pipeline for Fact-First Translation

The agent first searches for factual information in real time, then hands the results to the LLM for translation, achieving a 97% fact-check accuracy rate in UAT.
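A minimal sketch of this search-then-translate order follows. The knowledge dictionary, the prompt wording, and the `llm` callable are all hypothetical placeholders; the point is only that verified facts are looked up first and passed to the model together with the source text.

```python
def search_facts(text, knowledge):
    """Return verified facts whose key term appears in the source text."""
    return {term: fact for term, fact in knowledge.items() if term in text}

def build_prompt(text, facts, target_language):
    """Assemble a prompt that puts the verified facts before the source."""
    lines = [f"Translate into {target_language}. Use these verified facts:"]
    lines += [f"- {term}: {fact}" for term, fact in sorted(facts.items())]
    lines.append(f"Source: {text}")
    return "\n".join(lines)

def translate(text, knowledge, llm, target_language="Cantonese"):
    """Search first, then hand facts and text to the model together."""
    facts = search_facts(text, knowledge)
    return llm(build_prompt(text, facts, target_language))

# Usage with an echo function standing in for a real model call.
knowledge = {"Term A": "verified definition of Term A"}
output = translate("Explain Term A to players.", knowledge, llm=lambda p: p)
```

Keeping retrieval separate from the model call means the fact source can be swapped or audited without touching the translation step.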

Dedicated Search Engine for Better Data Quality

A custom-built search engine significantly improves the adaptability and accuracy of search data.

Dedicated Knowledge Base and API Tools

A specialized knowledge base and APIs close the gap in gaming-industry knowledge that general models often have.

Cantonese-Optimized Model Fine-Tuning

The dataset is curated, the model is fine-tuned, and the workflow is optimized to greatly improve the quality of the model's Cantonese output.

AI Multimodal Large Model Application - Function Matrix

Core Engine

Integrate industry-leading large language model services (including but not limited to OpenAI-GPT5 and Deepseek-V3) as a powerful translation foundation.

Model Fine-tuning

Deeply optimize the model using massive amounts of professional corpus to ensure accurate understanding and translation of professional terms such as competition rules.

Self-developed Intelligent Agent

A dedicated AI agent responsible for coordinating complex translation processes and executing key tasks.

Self-built Workflow Engine

An efficient and configurable engine to automate the processes of source text input, preprocessing, model invocation, post-processing, quality checking, and output.
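The stages listed above can be sketched as an ordered, configurable list of steps applied to a document. The `Workflow` class, the step functions, and the uppercase stand-in for the model call are illustrative assumptions, not the engine's actual interface.

```python
class Workflow:
    """Runs named steps in order and records which ones completed."""

    def __init__(self, steps):
        self.steps = list(steps)  # list of (name, function) pairs

    def run(self, text):
        doc = {"text": text, "log": []}
        for name, step in self.steps:
            doc = step(doc)
            doc["log"].append(name)
        return doc

def preprocess(doc):
    doc["text"] = doc["text"].strip()
    return doc

def invoke_model(doc):
    # Stand-in for a real model call: uppercases the text.
    doc["text"] = doc["text"].upper()
    return doc

def postprocess(doc):
    doc["text"] = doc["text"] + "."
    return doc

def quality_check(doc):
    if not doc["text"].strip("."):
        raise ValueError("empty output failed the quality check")
    return doc

workflow = Workflow([
    ("preprocess", preprocess),
    ("model", invoke_model),
    ("postprocess", postprocess),
    ("quality_check", quality_check),
])
result = workflow.run("  hello world  ")
```

Because the steps are plain data, stages can be reordered, removed, or replaced through configuration without changing the engine itself.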